This post is part of the AI Apprenticeship series:

- Part 1: AI Apprenticeship 2024 @ DiploFoundation

- Part 2: Getting introduced to the invisible apprentice – AI

- Part 2.5: AI reinforcement learning vs human governance

- Part 3: Crafting AI – Building chatbots

- Part 4: Demystifying AI

- Part 5: Is AI really that simple?

- Part 6: What string theory reveals about AI chat models

- Part 7: ‘Interpretability: From human language to DroidSpeak’

- Part 8: ‘Maths doesn’t hallucinate: Harnessing AI for governance and diplomacy’

By Dr Anita Lamprecht, supported by DiploAI and Gemini

Inspired by the AI Apprenticeship online course, I wanted to write a post on the relationship between AI reinforcement learning and human governance. By analysing their similarities and differences, we can better understand the potential impacts of AI.

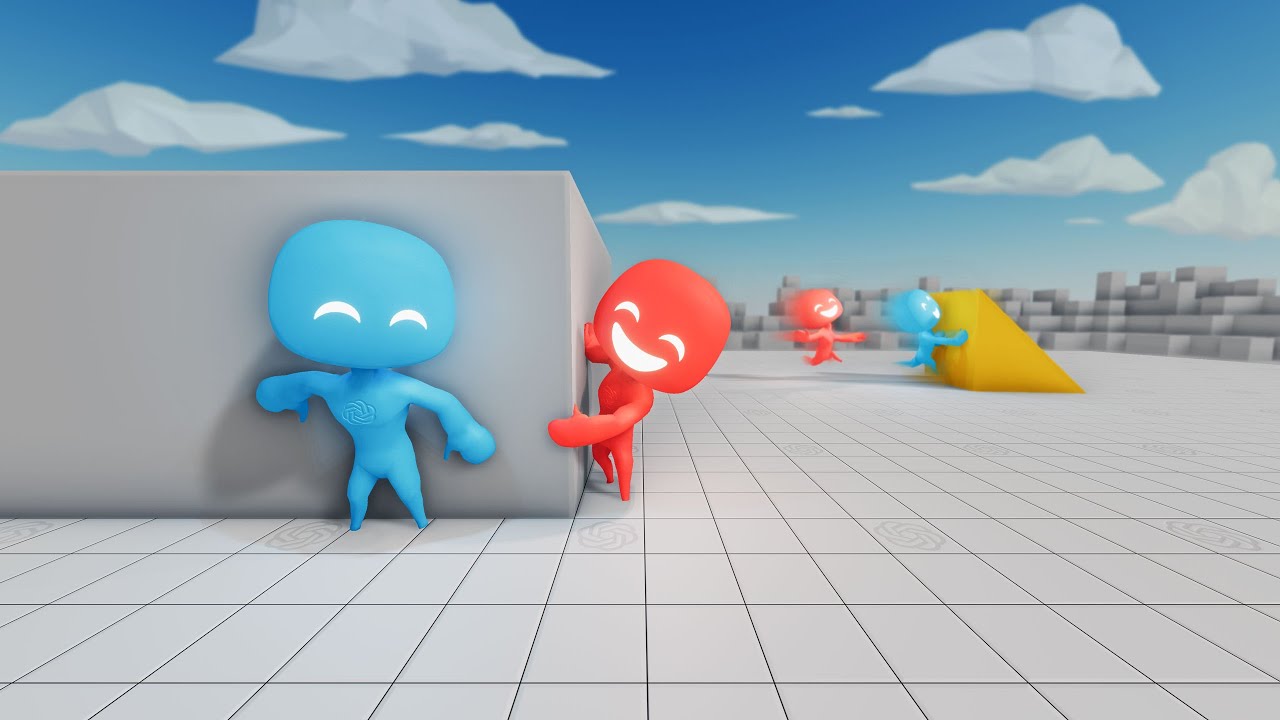

Reinforcement learning in hide-and-seek

Reinforcement learning (RL) is a subset of machine learning in which agents learn to optimise their behaviour through trial and error. A fascinating application of RL is the game of hide-and-seek, where agents learn to either hide or seek effectively. The game, which has been used as a testbed in AI research, shows how agents develop complex strategies and adapt to dynamic environments, mirroring certain aspects of human learning and decision-making.

In a study conducted by OpenAI, agents were placed in a virtual environment with movable objects and tasked with playing hide-and-seek. The agents used RL to develop strategies over millions of iterations. The hiders learned to block entrances with objects to create safe zones, while the seekers learned to use ramps to overcome obstacles. This emergent behaviour demonstrates the power of RL in discovering complex strategies from simple rules.

Human governance and behavioural regulation

Human governance involves establishing rules, norms, and institutions to regulate behaviour within societies. Unlike RL, which relies on computational algorithms to optimise behaviour, human governance is a complex interplay of cultural, legal, and ethical considerations. Different societies may adopt varying governance models, from democratic systems emphasising citizen participation to more centralised structures, each with its own power dynamics and cultural values.

Governance structures are designed to maintain order, protect rights, and promote welfare, often requiring consensus and compliance from the governed population. Governance systems are typically evaluated based on their effectiveness in achieving societal goals, such as justice, security, and economic prosperity. These systems rely on a combination of incentives and deterrents, similar to the reward and penalty system in RL, to influence behaviour. However, human governance also involves negotiation, persuasion, and the balancing of competing interests, which adds layers of complexity not present in RL environments.

Comparison of learning and adaptation

Both RL in hide-and-seek and human governance involve learning and adaptation, but they differ significantly in their mechanisms and outcomes. In RL, learning is driven by a clear, quantifiable reward function, and adaptation occurs through trial and error over numerous iterations. The agents in hide-and-seek adapt by exploring different strategies and retaining those that maximise their reward.
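To make the idea of a quantifiable reward more concrete, here is a minimal sketch in Python. It is not the method used in the OpenAI study: the strategy names, the payoff numbers, and the function names are all invented for illustration. A hider tries strategies at random, receives a reward of 1 or 0 each round, and keeps a running average of how well each strategy has worked.

```python
import random

# Hypothetical strategies with hidden success rates (illustrative numbers only,
# not taken from the OpenAI study).
TRUE_PAYOFF = {"run_away": 0.2, "hide_behind_box": 0.5, "block_door": 0.8}


def reward(strategy, rng):
    """Quantifiable feedback: 1.0 if the hider stays hidden this round, else 0.0."""
    return 1.0 if rng.random() < TRUE_PAYOFF[strategy] else 0.0


def learn_by_trial_and_error(rounds=3000, seed=0):
    """Try strategies at random and keep a running average reward for each."""
    rng = random.Random(seed)
    estimate = {s: 0.0 for s in TRUE_PAYOFF}
    tries = {s: 0 for s in TRUE_PAYOFF}
    for _ in range(rounds):
        strategy = rng.choice(list(TRUE_PAYOFF))  # trial
        r = reward(strategy, rng)                 # feedback from the environment
        tries[strategy] += 1
        # Incremental mean: nudge the estimate towards the observed reward.
        estimate[strategy] += (r - estimate[strategy]) / tries[strategy]
    return estimate


estimates = learn_by_trial_and_error()
best = max(estimates, key=estimates.get)
print("Estimated value of each strategy:", estimates)
print("Strategy the agent would retain:", best)
```

The agent is never told which strategy is best. It only sees the reward signal, and the preference for blocking the door emerges from the accumulated averages. Real RL systems are far more elaborate, but the feedback loop has the same shape.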

In contrast, human governance involves learning through historical experience, cultural evolution, and institutional development. Adaptation in human governance is often slower and more deliberate, as it requires changes in laws, policies, and social norms. The feedback mechanisms in governance are less direct and quantifiable than in RL, often involving complex social dynamics and political processes. These feedback loops can range from election results and public opinion polls to social movements and protests, shaping policies, and ensuring responsiveness to the needs of the population.

Emergent strategies and unintended consequences

One of the fascinating aspects of RL in hide-and-seek is the emergence of strategies that were not explicitly programmed. This emergent behaviour results from the agents’ interactions with their environment and each other, leading to innovative solutions to the challenges they face. For example, the hiders’ use of objects to block entrances was an emergent strategy that evolved from the basic rules of the game.

Similarly, human governance can lead to emergent behaviours and unintended consequences. Policies designed to achieve specific goals can have ripple effects throughout society, leading to outcomes that were not anticipated. For instance, raising the retirement age, while intended to address economic concerns related to an ageing population, might disrupt traditional family structures and caregiving arrangements. This shift could strain families and further increase existing inequalities, especially if the system lacks adequate and affordable childcare options or support services for elderly dependents. The complexity of human societies means that governance must be adaptive and responsive to these emergent challenges.

Innovation and the spectrum of hallucinations

The line between visionary thinking and hallucinations can be blurry for both humans and AI systems. For humans, this spectrum ranges from visionary eureka moments to distorted, delusional perceptions of reality, as well as creative explorations of fantasy. ‘Foresight’ is also on the spectrum, bridging visionary thinking and the potential for hallucinations. It is a method used to explore future scenarios, and is currently a highly sought-after skill. Foresight is one of the five essential skills mentioned in the UN 2.0’s Quintet of Change, particularly because the needed innovations require exploring new ideas and going beyond the conventional.

AI agents operate differently from humans, particularly as they do not have inherent natural boundaries, such as common sense, cognitive limits, ethical considerations, or physical and biological constraints. This lack of human boundaries allows the system to deliver unexpected perspectives and results, so novel that their consequences might be unpredictable for us. We interpret the outputs as creative or even revolutionary if they sound good and promising, but label them as ‘hallucinations’ if they sound wrong or prove to be wrong.

This potential for AI ‘hallucinations’ highlights the need for responsible AI use in governance, ensuring that AI-generated ideas, like human ideas, are evaluated and validated against human values to avoid unintended consequences. But is it truly accurate to speak of ‘hallucinations’, or are we once again falling for the trap of anthropomorphism by attributing human processes to AI, instead of staying with the more neutral metaphor of hide-and-seek?

Part of the game: Randomness and bias

It is fun to watch the AI agents play hide-and-seek. When walls and boundaries are removed, some agents simply run off into the infinite world of data. Will they ever return with any results? To prevent agents from getting lost in the vastness of information, the system has to manage a trade-off: how much probability to give to random behaviour. This is a core principle in RL, where it is known as the exploration-exploitation trade-off, and a challenge when designing AI systems: finding a balance between randomness (which, in chat models, is often associated with ‘hallucinations’) and directed exploration to produce usable results.
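One common way to express this trade-off is an ‘epsilon-greedy’ rule: with a small probability (epsilon) the agent acts randomly, and otherwise it repeats whatever has worked best so far. The sketch below is an illustration under invented assumptions (three strategies with made-up success rates, and a hypothetical `run` function); it is not how any particular AI system is configured.

```python
import random

# Hypothetical success rates of three strategies (illustrative numbers only).
PAYOFF = [0.3, 0.5, 0.7]


def run(epsilon, steps=5000, seed=1):
    """Return the average reward of an epsilon-greedy agent.

    epsilon is the probability of acting randomly on any given step.
    """
    rng = random.Random(seed)
    value = [0.0] * len(PAYOFF)  # running estimate of each strategy's worth
    count = [0] * len(PAYOFF)
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            choice = rng.randrange(len(PAYOFF))  # randomness: try anything
        else:
            # Directed behaviour: repeat the strategy that looks best so far.
            choice = max(range(len(PAYOFF)), key=lambda i: value[i])
        r = 1.0 if rng.random() < PAYOFF[choice] else 0.0
        count[choice] += 1
        value[choice] += (r - value[choice]) / count[choice]
        total += r
    return total / steps


for eps in (0.0, 0.1, 1.0):
    print(f"epsilon={eps}: average reward {run(eps):.3f}")
```

In this toy setting, an agent with no randomness at all (epsilon of 0) never leaves the first strategy it tries, while a fully random agent (epsilon of 1) never benefits from what it has learned. A small amount of randomness lets the agent discover the better strategy and then mostly stick with it.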

Regarding usability, both RL and human governance raise ethical considerations. In RL, bias in training data can lead to AI systems perpetuating societal biases. However, this bias can also be used positively to surface hidden societal biases, allowing for analysis and improvement. Indeed, it is often through exploring randomness that we uncover such biases, revealing hidden patterns and challenging our assumptions.

For humans, bias is often ingrained and non-random, stemming from complex personal and societal factors. For AI, bias is a more direct reflection of the data it is trained on, which could make it easier to identify and address. In human governance, ensuring fairness and accountability remains an ongoing challenge. As AI plays an increasingly prominent role, it is essential to address these ethical implications proactively, ensuring that AI is used in a manner that aligns with human values and promotes well-being.

This leaves us with the question of how to use AI for human governance.

The AI Apprenticeship online course is part of the Diplo AI Campus programme.
