Dear colleagues,

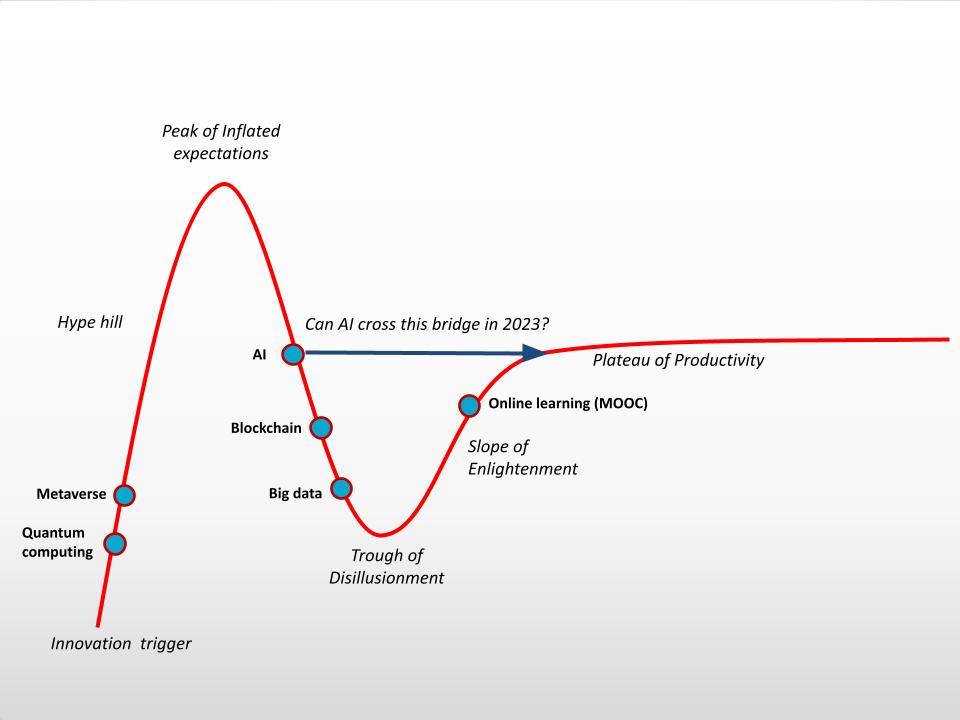

We are nearing the end of a year coloured by artificial intelligence (AI). After the November launch of ChatGPT, 2023 began with a winter of AI excitement, followed by a spring of metaphors and a summer of reflections. The autumn brought greater clarity on how to govern AI in an informed and inclusive manner.

While AI has received a lot of attention from the public and policymakers, other digital aspects of our daily lives should not be taken for granted. Despite all wars, conflicts, and tensions, the internet continued to function globally. Internet infrastructure remains robust. Thus, in this 2023 Recap, we will also revisit cybersecurity, e-commerce, standardisation, and other issues that are not in the spotlight but are still extremely important in the digital world.

Best wishes for 2024!

Jovan and the Diplo Team

P.S. Predictions for 2024 will be released early in the year and discussed at our Predictions event on 11 January 2024. You can register by clicking on the red button below.

2023 Recap of 12 AI and Digital Developments

From a Winter of excitement via a Spring of metaphors and a Summer of reflections to an Autumn of clarity

ChatGPT was the most impressive success in the history of technology in terms of user adoption. In only 5 days, it acquired 1 million users, compared to, for example, Instagram, which needed 75 days to reach 1 million users. In only two months, ChatGPT reached an estimated 100 million users.

The launch of ChatGPT last year was the result of countless developments in AI dating all the way back to 1956! These developments accelerated in the last 10 years with probabilistic AI, big data, and dramatically increased computational power. Neural networks, machine learning (ML), and large language models (LLMs) set the stage for AI’s latest phase, which brought tools like Siri and Alexa, and, most recently, generative pre-trained transformers, better known as GPTs, which are behind ChatGPT and other new tools.

ChatGPT started mimicking human intelligence by drafting our texts for us, answering questions, and creating images.

The powerful features of ChatGPT triggered a wave of metaphors in the spring of this year. Whenever we encounter something new, we tend to use metaphors and analogies to compare its novelty to something we already know.

Most AI is anthropomorphised and typically described as the human brain, which ‘thinks’ and ‘learns’. ‘Pandora’s box’ and ‘black box’ are terms used to describe the complexity of neural networks. As spring advanced, more fear-based metaphors took over, centred around doomsday, Frankenstein’s monster, and Armageddon.

As discussions on governing AI gained momentum, analogies were drawn to climate change, nuclear weapons, and scientific cooperation. All of these analogies highlight similarities while ignoring differences.

Summer was relatively quiet and a time to reflect on AI. Personally, I dusted off my old philosophy and history books to search for old wisdom to answer current AI challenges, which are far beyond simple technological solutions.

Under the series ‘Recycling Ideas’ I dove back into ancient philosophy, religious traditions, and different cultural contexts, from Ancient Greece to Confucius, India, and the Ubuntu concept of Africa, among others.

Clarity pushed out hype as AI increasingly made its way onto the agendas of national parliaments and international organisations. Precise legal and policy formulations have replaced the metaphorical descriptions of AI. In numerous policy documents from various groupings—G7, G20, G77, G193, UN—the usual balance between opportunities and threats has shifted more towards risks. Some processes, like the London AI Summit, focused on the long-term existential risks of AI.

The EU AI Act requires a more balanced approach between long-term and more immediate risks, including AI monopolies and the future of work and education. The UN’s Interim Report: Governing AI for Humanity contributed to a more informed and nuanced discussion on AI risks. In search of inspiration for AI governance, many proposals mentioned the International Atomic Energy Agency, CERN, and the Intergovernmental Panel on Climate Change.

In 2023, AI terminology has percolated into the daily language of societies worldwide. ChatGPT is becoming a synonym for communication with AI just as Google is a synonym for searching online.

AI terminology ranked high in selections of words of the year: AI was selected by Collins Dictionary; hallucinate, in its AI-related sense, was chosen by the Cambridge Dictionary; prompt was the second-ranked word for Oxford University Press; and neural networks was selected as the Russian word of the year by the Pushkin Institute.

At Diplo, we approach AI holistically by fostering balanced tech-human interplay through a cognitive proximity approach. On awareness building, we run a series of webinars: Will AI take over diplomatic reporting? and What is the (potential) role of AI in diplomatic negotiations? In our teaching activities, we discuss how ChatGPT can help us to rethink education. We also run a series of courses and training programmes on AI. Diplo’s AI team is experimenting with platforms for AI reporting and using smaller AI models such as LLaMA, which can be affordable for small organisations. If you want to learn more about the results of Diplo’s experience with the LLaMA model, please email us at diplo@diplomacy.edu.

Oscar for AI & 2023 Recap from Diplo Creative Lab:

Source: https://www.globalxetfs.com/

In addition to ChatGPT, several other notable generative AI models, such as Midjourney, Meta’s open-source Llama models, Baidu’s AI Ernie Bot and Google’s Bard and Gemini, have been released or updated.

Elon Musk and over 1,000 other technology leaders and researchers have called for a pause on the development of the most advanced AI systems, citing ‘profound risks to society and humanity’. In an open letter, they urged AI developers to pause training AI systems more powerful than GPT-4 for at least six months, initiating a broader debate about the potential risks of technology, with some even calling AI a threat to humanity.

In 2023, OpenAI, Stability AI, and Anthropic were sued by writers and developers for copyright infringement. The plaintiffs claim that these companies’ tools are built on massive data sets that include their copyrighted content, used without their consent or compensation.

ChatGPT was briefly banned in Italy due to privacy concerns. Italy’s data protection watchdog accused OpenAI, the company behind ChatGPT, of unlawfully collecting personal data from users and not having an age-verification system in place. The company complied with the watchdog’s requests, but the episode raised concerns about privacy.

OpenAI upgraded ChatGPT with web browsing capabilities. With the ability to browse the web, ChatGPT can now provide the latest information available on the internet, access a wider range of information, authoritative sources, and links to images and videos, and deliver more accurate and relevant responses to user queries.

The Sam Altman saga at OpenAI has been a rollercoaster ride, with Altman’s fate being a topic on everyone’s lips. On 17 November 2023, OpenAI’s board of directors dismissed Altman from his CEO position. However, this move triggered a strong response from OpenAI’s employees, who rebelled against the board’s decision. They threatened to defect to Microsoft to join Altman and co-founder Greg Brockman if they were not reinstated. It all culminated in an announcement on 21 November 2023, with Altman’s reinstatement as CEO.

The United States: On 30 October, President Biden issued an executive order mandating AI developers to provide the federal government with an evaluation of the data used to train and test their AI applications, the applications’ performance measurements, and their vulnerability to cyberattacks. Read more in our Newsletter.

EU lawmakers reach a deal on AI Act: The EU has reached a provisional agreement on the AI Act. The draft legislation still needs to go through a few steps for final endorsement, but the political agreement means its key elements have been approved – at least in theory. There’s quite a lot of technical work ahead, and the act does have to go through the EU Council, where any unsatisfied countries could still throw a wrench into the works. The act will go into force two years after its adoption, so it will likely apply from 2026. Read more in our Newsletter.

China: China was the first country to introduce its interim measures on generative AI, effective in August this year. What is the aim? To solidify China’s role as a key player in shaping global standards for AI regulation. China also unveiled its Global AI Governance Initiative during the Third Belt and Road Forum, marking a significant stride in shaping the trajectory of AI on a global scale. China’s GAIGI is expected to bring together 155 countries participating in the Belt and Road Initiative, establishing one of the largest global AI governance forums. Read more in our Newsletter.

The UN Security Council on AI: The UN Security Council held its first-ever debate on AI, delving into the technology’s opportunities and risks for global peace and security. In his briefing to the 15-member council, UN Secretary-General António Guterres promoted a risk-based approach to regulating AI and backed calls for a new UN entity on AI, akin to models such as the International Atomic Energy Agency, the International Civil Aviation Organization, and the Intergovernmental Panel on Climate Change. Read more in our Newsletter and AI-generated summary of country positions, prepared by DiploGPT.

G7: The G7 nations released their guiding principles for advanced AI, accompanied by a detailed code of conduct for organisations developing AI. A notable similarity with the EU’s AI Act is the risk-based approach, placing responsibility on AI developers to assess and manage the risks associated with their systems. Read more in our Newsletter.

UK AI Safety Summit: The UK’s summit resulted in a landmark commitment among leading AI countries and companies to test frontier AI models before public release. The Bletchley Declaration identifies the dangers of current AI, including bias, threats to privacy, and deceptive content generation. While addressing these immediate concerns, the focus shifted to frontier AI—advanced models that exceed current capabilities—and their potential for serious harm. Read more in our Newsletter.

The UN’s High-Level Advisory Body on AI: The UN has launched a High-Level Advisory Body on AI comprising 39 members. Led by UN Tech Envoy Amandeep Singh Gill, the body published its first recommendations in December (Interim Report: Governing AI for Humanity), with final recommendations expected next year. These recommendations will be discussed during the UN’s Summit of the Future in September 2024.

The UN GA Resolution on Lethal Autonomous Weapons Systems or LAWS: On 22 December, the UN General Assembly adopted a resolution aimed at advancing international regulations concerning weapons utilising AI (in war) or Lethal Autonomous Weapons Systems (LAWS). This resolution seeks to address the development of weaponry capable of autonomously selecting human targets without human intervention.

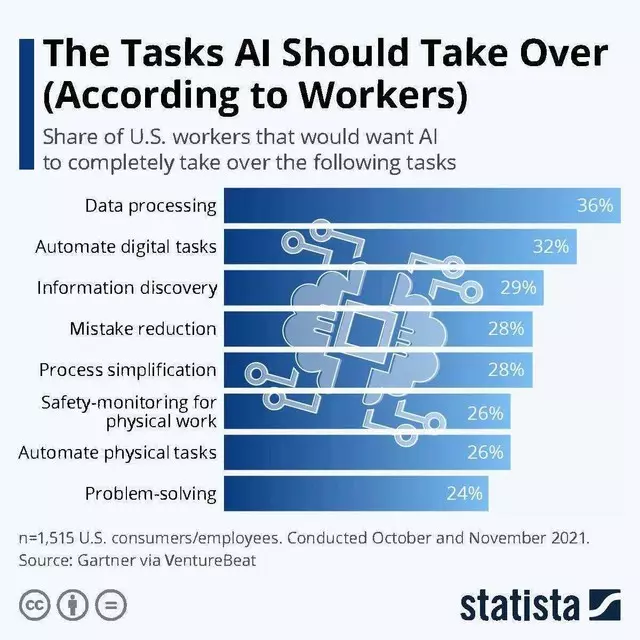

AI has entered daily life and continues to challenge existing systems in new and more visible ways. Take OpenAI’s recently launched ChatGPT model: it may be fun to play with, and it surely is impressive, but it is also challenging for educational systems (with professors now trying to detect AI-written texts instead of plagiarised ones), and raising new questions about the protection of intellectual property rights. How prepared are we to deal with these and similar issues? How can existing AI governance address new AI challenges?

As AI becomes more prevalent in everyday life, questions about how to govern it will become more important. The growing practical relevance of AI will also shift focus from general discussions on ethics (i.e. how to ensure that AI solutions are developed and used in line with ethical principles) to more hands-on issues, such as adjusting pedagogy and educational policies to the possibility that AI can draft students’ assignments and theses. Similar examples of AI-driven policy changes could be identified across economic, cultural, legal, and other fields.

The good news is that we will not need to start from scratch. There are numerous ongoing national, regional, and global policy and regulatory processes.

In 2023, Europe will keep its tradition of being a trendsetter in digital governance (think of data, cybersecurity, and anti-monopoly) by advancing work on the EU’s draft AI Act and the Council of Europe’s Committee on AI’s work on a draft convention on AI and human rights.

Within the EU, there’s a good chance that the AI Act may be adopted in early 2024. It will all depend on how fast the European Parliament, the Council of the EU, and the European Commission can come to an agreement on contentious points. As the negotiations move forward, many digital actors will step up their lobbying in Brussels because the EU regulation could have effects outside of the EU, like the GDPR. The AI Act could also create tensions with the USA, a strong proponent of self-regulation.

At the Council of Europe, the Committee on AI (CAI) has started discussing a Zero Draft [Framework] Convention on Artificial Intelligence, Human Rights, Democracy and the Rule of Law. The draft presented during the Committee’s latest meeting in September 2022 is not yet public, but the European Commission—party to the discussions—has revealed that it covers provisions related to:

The drafting and negotiation process will advance in 2023 with two CAI meetings: 11–13 January and 1–3 February.

There’s a potential overlap between the two processes, as a recent decision of the Council of the EU indicated: ‘The convention overlaps to a large extent with [the AI] legislative proposal in its scope since both instruments aim to lay down rules applicable to the design, development, and application of AI systems, provided and used by either public or private entities.’ To resolve this, the Commission has been mandated by the Council to represent the EU in CAI negotiations on ‘matters falling within the exclusive competence of the Union, in order to protect the integrity of Union law and to ensure that the rules of international law and Union law remain consistent’. It remains to be seen how the two parallel processes will evolve and how potential overlaps will be tackled.

‘It is difficult to predict exactly what AI governance will look like in 2023, as it will depend on a variety of factors such as technological developments, economic conditions, and political and social changes,’ ChatGPT responded.

However, it is likely that AI governance will continue to be a topic of discussion and debate among governments, businesses, academics, and other stakeholders. There may be efforts to establish global or regional standards for the development and use of AI, as well as efforts to address concerns about the impact of AI on employment, privacy, security, and other issues.

It is also possible that AI governance frameworks will emerge at the national or local level, tailored to the specific needs and context of different countries or communities.

Dive deeper: AI and its policy implications

The US-China chip war has entered a new, debilitating phase. Image: Screengrab / News Video

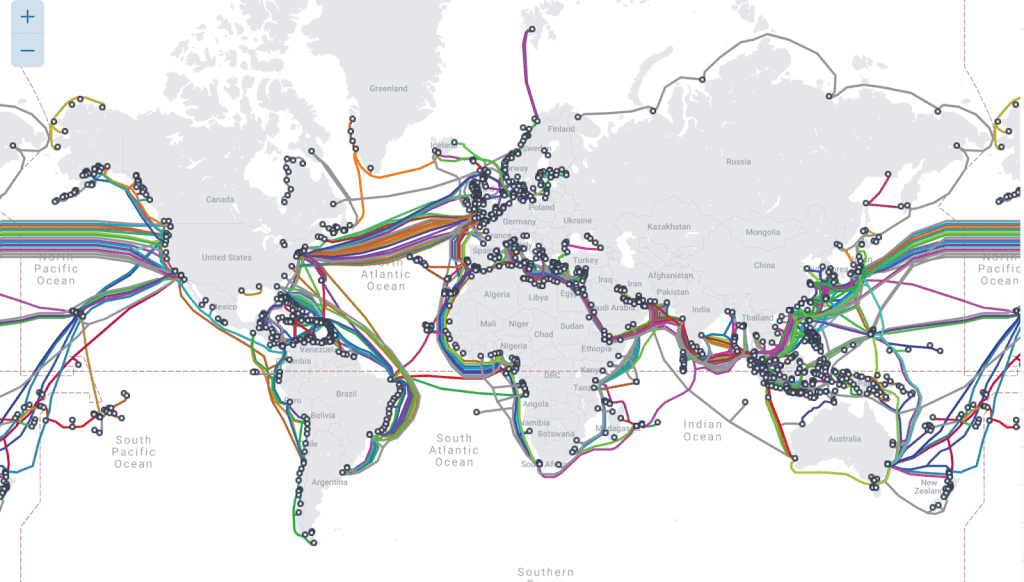

The main geopolitical development in 2023 was the digital decoupling between China and the United States. On microchips, the two sides moved further apart throughout the semiconductor supply chain, from design to manufacturing. The current shared infrastructure on submarine cables has begun to split into two systems. Outer space is becoming a commercial and, possibly, military battleground as well.

Digital geopolitical tensions are showing no signs of easing in 2023, especially between the USA and China. Worse, global conflicts and tensions could trigger the fragmentation of the internet. Digital geopolitics will be centred around the protection of submarine cables and satellites, the production of semiconductors, and the free flow of data.

Digital geopolitics will continue to evolve in 2023 as technology advances and the world becomes increasingly interconnected. We can expect to see more countries using digital tools to influence global politics, such as through cyber warfare, digital surveillance, and the use of artificial intelligence. We may also see the emergence of new digital powers, such as tech giants and other non-state actors, that will have an increasing influence on global politics. Additionally, digital geopolitics will likely become more complex as countries grapple with issues such as data privacy, cyber security, and the regulation of digital technologies.

Chips are the primary geopolitical battleground between China and the United States, with two key dynamics. The first is trade, with restrictions on the export of chips and manufacturing equipment to China, particularly by the United States and its allies. The second is the accelerated development of national capacity for semiconductor design and manufacturing.

The most significant surprise of 2023 came when Huawei released the Mate 60 Pro phone. This phone is equipped with an advanced Kirin 9000S chip based on 7-nanometre technology, which was not expected to be available in China. It is not entirely clear whether the sanctions regime that the United States has imposed on semiconductor technology is ineffective or whether China has independently developed new technologies for advanced semiconductors.

In this contest, the Netherlands has the most advanced semiconductor technology, including deep ultraviolet (DUV) lithography systems for the development of advanced microchips. China initiated a trade dispute procedure at the World Trade Organization (WTO) against US chip export control measures, arguing that these measures ‘threatened the stability of the global industry supply chains’.

Many countries are developing their national semiconductor capacities. Japan aims to treble sales of domestically made microchips by 2030. The EU set into motion a comprehensive microchip strategy around the EU Chips Act, which entered into force on 21 September 2023.

The USA and Canada have pledged more money for domestic semiconductor companies: the USA pledged US$50 million under the Defense Production Act to fund advanced packaging for semiconductors and printed circuit boards, while Canada pledged up to CA$250 million for semiconductor projects from the Strategic Innovation Fund.

As North American countries make another move to reduce their reliance on Asian chipmakers, two other countries took steps in the opposite direction. A delegation of Czech politicians and company representatives hopes for Taiwanese investment in Czech chip technologies. Brazil will reportedly seek mainland Chinese technology and investment in developing its semiconductor industry.

China has undertaken an ambitious initiative, the ‘new whole-nation system’. This programme aims to bolster its semiconductor sector by leveraging state resources, diverting investments, and prioritising the development of cutting-edge technologies. Essentially, the aim is to achieve self-reliance in advanced technology. As a response to the imposed US restrictions from October 2023, China’s trade council has urged a reconsideration of rules limiting American investments in the country’s tech sector. The council argues that the restrictions are vague and do not differentiate between military and civilian applications. Simultaneously, Chinese tech firms like Baidu are diversifying their supply chains, seeking alternatives to reduce reliance on US-based semiconductor providers.

Semiconductors are at the centre of the geopolitical battle between the USA and China. The US approach of limiting China’s access to cutting-edge microchips and technology production started with President Trump and continued with the Biden administration. China will need years to put together the technology for the production of the next generation of more sophisticated semiconductors.

These geopolitical tensions are having a rippling effect:

Submarine cables became the focus of global interest following the Nord Stream blast. Cutting major submarine cables could create havoc in our digitally interdependent civilisation. In the same context, Prof. Jeffrey Sachs sent a warning message at the UN Security Council hearing on the risks to digital cables. NATO has also set up a Critical Undersea Infrastructure Protection Cell, one of whose main aims is to protect internet submarine cables.

Another area of increasing decoupling between China and the US is submarine cables. The US has declined landing rights for a few new submarine cables that have Chinese landing points or involve Chinese companies in cable laying or management. Following Chinese retaliation, it is very likely that two parallel submarine cable systems led by China and the United States will emerge.

In January, we said that outer space activities would likely get more attention in governance and diplomacy talks. And, indeed, quite a lot has been going on over the past 12 months. At the UN level, the Open-ended Working Group on reducing space threats (OEWG-Space) through norms, rules, and principles of responsible behaviour held its third session and saw member states discuss a wide range of issues, from preventing harmful interferences in space, to addressing threats against space objects (in particular satellites), and preventing an arms race in outer space.

At the UN Office for Outer Space Affairs, two new working groups were created to explore: (a) the status and application of the five UN treaties on outer space, and (b) the legal aspects of space resource activities. Meanwhile, space agencies and private actors are coming up with new initiatives and plans. Just a few examples: competition is picking up in low-earth orbit communication networks, as China’s Aerospace Science and Technology Corporation and Amazon’s project Kuiper are preparing to launch satellite constellations to compete with the services provided by the likes of Starlink and OneWeb. With the moon back in the focus of space exploration activities, plans are unfolding—within the European Space Agency (ESA) and US company Lockheed Martin, for instance—to deploy new communication networks around our earth’s natural satellite.

Satellites may offer some alternatives, but they can’t replace fibre as a global backbone. They have also been shown to be susceptible to geopolitics, such as the hacking of ViaSat or Musk’s pondering whether to continue the provision of Starlink services to Ukraine. Beyond the role that satellites play in giving people access to the internet or letting them talk to each other in an emergency, outer space activities are likely to get more and more attention in governance and diplomacy talks in the coming years. Issues related to frequency interferences, satellite collisions, the cyber-resilience and security of space services, space debris, the exploration of space resources, and the growing competition between states as well as between private actors will continue to grow in relevance, building on recent developments.

In 2022 we saw, for instance, a new ITU resolution calling for strengthened public-private cooperation in ensuring that ‘the benefits of space will be brought to everyone, everywhere’; the launch of a UN Open-ended working group on reducing space threats through norms, rules, and principles of responsible behaviours; and a growing number of countries joining the Artemis Accords (spearheaded by the USA, the Accords outline principles to enhance the governance of civil exploration and use of outer space). It is also telling that the 2024 Summit of the Future, called for by the UN Secretary-General, is expected to include a track on outer space, with the goal to ‘seek agreement on the sustainable and peaceful use of outer space’.

Dive deeper: Space diplomacy

Dive deeper: Data governance

The Metaverse is the biggest casualty of the generative AI ‘frenzy’ due to the tech sector’s overall shift to the development of AI. Even Meta, which has invested billions in its Metaverse, is shifting its focus to AI. Some analysts interpreted this as the start of Meta’s backpedalling from the Metaverse project. There are several reasons behind this: some reports suggest that the VR market is shrinking, and others say that Meta’s flagship social VR app Horizon Worlds is failing to attract users.

The main reason behind the ‘failure’ or slow adoption of the metaverse concept is the lack of infrastructure – mainly quality internet connections (think of 5G), advanced chips that can support this vision, and affordability of devices and computing power.

In 2023, the focus of blockchain technology was mainly on its use as an underlying structure of the digital identity networks (digital ID). Meanwhile, quantum computing is getting ‘regular’ visibility.

Apart from AI, last year was technologically quiet. Metaverse technologies have lost momentum as a part of the overall tech sector crisis. Tencent Holdings abandoned its plans to enter the virtual reality hardware market, while Microsoft announced that it is closing its enterprise metaverse division. In a letter to his staff, Mark Zuckerberg signalled reduced support for the Metaverse as their ‘single largest investment is in advancing AI and building it into every one of our products’.

It is noteworthy that the development of the metaverse has garnered greater attention from Asian governments, particularly in China and South Korea, than from governments in the rest of the world. These countries are exploring the potential of metaverse technology beyond gaming, with applications in industry and public administration. South Korea’s Ministry of Science and ICT has a Metaverse Fund to drive metaverse initiatives in the country, and Seoul officially launched its metaverse platform. Meanwhile, in China, Shanghai and Zhejiang implemented metaverse development strategies in 2022.

In mid-2023, Apple unveiled its Vision Pro mixed-reality headset, which blends digital content with the physical world. The headset is designed to cater to both virtual and augmented reality experiences, and it allows users to switch between AR and VR. Meta’s Ray-Ban smart glasses gained the most media attention. These smart glasses are a next-generation wearable tech device that integrates AI technology, making them more intelligent than previous models and introducing many new features.

The first ITU Forum on embracing the metaverse took place on 7 March 2023 in Riyadh, Saudi Arabia, and began the ITU’s endeavour to promote metaverse pre-standardisation initiatives. The forum’s objective is to facilitate global dialogue on the metaverse, provide inputs, and discuss relevant topics that can aid the work of the newly established ITU Focus Group on the metaverse.

The ITU Focus Group on the metaverse, which was established in late 2022, held its first meeting in March 2023. This group aims to develop a roadmap for setting technical standards to make metaverse services and applications interoperable, enable a high-quality user experience, ensure security, and protect personal data.

The European Commission has adopted a strategy for Web 4.0 and virtual worlds. The EU strategy aims to steer the next technological transition and ensure an open, secure, trustworthy, fair, and inclusive digital environment. The strategy aims to empower people and reinforce skills, support a European Web 4.0 industrial ecosystem, promote virtual public services, and shape global standards for open and interoperable virtual worlds. The Commission believes that Web 4.0 will be the next generation of internet infrastructure, which will allow integration between digital and real objects and environments.

China’s Ministry of Industry and Information Technology (MIIT) revealed plans to create a working group dedicated to defining standards for the metaverse. This initiative aims to expedite the process of standardising the concept of the metaverse. MIIT emphasised the importance of keeping pace with global advancements in this field to maintain alignment with international developments.

The US Federal Communications Commission (FCC) has given the green light to a new class of very low-power devices, including augmented reality (AR) and virtual reality (VR) headsets, to utilise the 6 GHz band. This development is expected to have a tangible impact on the technology market by enabling faster data transfers and minimising interference. For several years, major tech companies, including Apple, Google, Microsoft, and Meta, have been advocating for access to the 6 GHz band.

Blockchain technology was used in 2023 mostly as an underlying structure of the digital identity networks (digital ID) which were launched extensively throughout 2023.

Apart from government-approved solutions, the digital identity network Worldcoin, created by OpenAI CEO Sam Altman, caught most of the world’s attention. Several countries expressed concerns regarding the Worldcoin project and the privacy of the biometric data collected for the purpose of authentication.

New forms of open blockchains were tested by the finance industry in an effort to make online payments more transparent and trustworthy for everyone. Visa used the open, permissionless blockchain Solana in a pilot case. Solana belongs to a second class of permissionless blockchains, which offer a scaling solution for handling massive transaction volumes. Digital collectibles, or non-fungible tokens (NFTs), were less in focus in 2023, but this new market did not escape regulators’ scrutiny. Some celebrities and public figures were indicted for promoting NFTs, which US regulators saw as an unregistered offering of crypto asset securities.

Last year started with a few big promises. Web 3.0, the metaverse, and AI in decentralised blockchain networks promised us astonishing feats. Enthusiasm dwindled towards the end of the year (with the exception of AI’s steady momentum).

This year started without any announcements about the next big thing in technology. This will create the space to take a step back and see how we would like to shape our digital future.

The metaverse topped the list of buzzwords in 2022, courtesy of Meta CEO Mark Zuckerberg’s vision announced at the end of 2021. Fast forward to January 2023: the metaverse is not taking off the way it was envisaged by Meta, formerly Facebook, which centred its future business model around it.

The initial monthly target of 500,000 active users on Horizon Worlds—Meta’s metaverse platform—was reduced to 280,000 users. Currently, there are fewer than 200,000 active users per month. Some of Horizon’s virtual spaces have never been visited.

Yet, we feel this slowdown is temporary. Heavyweights Microsoft, Apple, and Google are also investing heavily in metaverse applications and tools. A new generation of users with gaming experience will dominate the internet population in the coming years. The metaverse and virtual/extended/augmented realities are here to stay, and 2023 will be the year for background developments and regrouping, ahead of future growth in the metaverse, VR, and AR in the medium and long term.

Blockchain suffered a negative spillover from the recent troubles on the cryptocurrency market. The demise of FTX showed how the technical architecture of the blockchain can be abused to achieve the exact opposite of its proclaimed benefits.

Blockchain technology’s potential for decentralisation can be easily transformed into centralised control by those who control access to blockchain-driven platforms and services. This is what happened with tech platforms, which ended up dominating the internet market despite the decentralised technical design of the internet as a network of networks. Whether the same will happen with the blockchain remains to be seen in the coming years.

Last but not least, AI is gaining maturity both in the realisation of AI potential and governance. At the end of 2022, Lensa and ChatGPT created new possibilities for generating text and images. In 2023, AI will have to move towards productive use, requiring less technology and more organisational and management changes for optimal interaction between humans and machines.

Digital technology is expected to continue to evolve rapidly over the next few years. In 2023, we can expect to see further advances in artificial intelligence, machine learning, and natural language processing. We may also see the emergence of new technologies such as quantum computing, blockchain, and 5G networks. Additionally, digital technology will continue to be used to improve healthcare, education, and other areas of society. Finally, digital technology will continue to be used to create new products and services that make our lives easier and more efficient.

Dive deeper: Metaverse | Blockchain | AI

IBSA, which stands for India, Brazil, and South Africa, is a group of countries and developing economies with vibrant digital scenes. They are active supporters of multilateral and multistakeholder approaches, with many examples of inclusion of the tech community, academia, the private sector, civil society, local communities, and other actors in digital governance.

In 2023, IBSA countries have taken an active role in global digital development and governance. One example of IBSA’s “third-way” approach to global digital developments is the India-sponsored DPI initiative. At the G20 Summit in September, G20 leaders officially recognised the benefits of digital public infrastructure (DPI) that is ‘safe, secure, trusted, accountable, and inclusive’ – the first-ever multilateral consensus on DPI and its outcomes.

With the G20 summit endorsing India’s DPI framework and its plan for a global repository for DPI products, digital public infrastructure went from being a niche term just a year or two ago to a globally recognised driver of economic development.

This historic recognition and creation of a global repository for digital public infrastructure was closely followed by the creation of a Social Impact Fund to advance DPI in the Global South, and the One Future Alliance (OFA). India now supports the development of digital public infrastructure in low- and middle-income countries (LMICs) through numerous initiatives noted in the Bengaluru declaration of G20 Digital Ministers.

India further accelerated IBSA digital momentum when it offered developing countries such as the Philippines, Morocco, Ethiopia, and Sri Lanka the Modular Open Source Identity Platform (MOSIP), an export version of the Indian identity system Aadhaar. The DPI sharing consists of a triad of identity, payment, and data management applications.

IBSA countries will remain relevant as Brazil assumed the presidency in March, celebrating the 20th anniversary of the dialogue forum, and is set to take the presidency of the G20 Forum in 2024.

India, Brazil, and South Africa (IBSA), which collaborate through the IBSA Forum, are likely to play a prominent role in the process of reforming digital governance.

There’s momentum around the three D’s: the trio are developing economies, functional democracies, and supporters of multilateral diplomacy.

The first tangible results from IBSA’s digital momentum could be expected during India’s G20 presidency, which, among others, will promote ‘a new gold standard for data’.

Digitalisation is the engine of growth in IBSA economies. Among the three countries, India is the leader, with a vibrant digital economy. In all three, future digital growth will happen due to their large and young populations and economic dynamics.

But digitalisation also tends to exacerbate major societal tensions, which these countries face, including the digital divide, and the need to have digital governance that will reflect local cultural, political, and economic specificities.

The three countries have spearheaded digital inclusion by prioritising affordable access to citizens, by supporting training for digital skills, and by a legal framework for the growth of small digital enterprises. For example, India’s Aadhaar biometric ID system is seen by many as a leading digital identity initiative, inspiring similar systems in other countries. South Africa has been a leader in the inclusion of women and youth. Brazil works hard with other marginalised groups, from people with disabilities to indigenous people.

On data and sustainable development, India’s G20 presidency aims for strategic leadership with the following practical initiatives: (a) self-evaluation of the national data governance architecture, (b) the modernisation of national data systems to incorporate citizen preferences regularly, (c) transparency principles for governing data. With a large population, IBSA countries also see data as a national resource. The Indian G20 presidency’s calls for ‘a new gold standard for data’ can help reconcile the competing issues around the free flow of data and data sovereignty.

India, Brazil, and South Africa are all working democracies with regular elections and strong civil society scenes. In India, it was a civil society initiative of over 1 million signatures that blocked Facebook’s Free Basics project and helped preserve net neutrality.

Brazil has pioneered a unique national multistakeholder model around the Internet Governance Steering Committee (CGI.br). South Africa has had major successes in youth and female inclusion in digital processes on a national level.

Like other countries, the IBSA trio also have to deal with the digital aspects of their societal and political problems. India has had the highest number of internet shutdowns in the past few years. Brazil has witnessed major misuse of social media platforms during elections. South African women experienced high levels of online violence.

Digital problems are addressed and discussed in media, civil society spaces, and parliaments in India, Brazil, and South Africa.

India, Brazil, and South Africa are supporters of multilateral diplomacy. As members of various international coalitions, processes, and organisations, they have a strong convening capacity. They can also bring about larger regional and global partnerships by engaging countries with similar digital strengths and problems.

India and Brazil have both hosted meetings of the UN’s Internet Governance Forum (IGF), and both support policy inclusion of academia, civil society, business, and other significant actors. Brazil hosted the 2014 NetMundial meeting, a unique experiment in multistakeholder decision shaping.

South Africa has been a relatively active actor in global internet governance, from a very prominent role in WSIS negotiations, to engagement in several digital-related processes and discussions such as the OEWG, the Ad-hoc Committee on Cybercrime, UNESCO’s work on the recommendation on ethics and AI, and UN Human Rights Council debates on digital rights.

Many countries, such as Indonesia and Singapore in Asia, Mexico and Argentina in Latin America, and Nigeria, Kenya, and Rwanda in Africa, share IBSA concerns and approaches to digital governance.

IBSA (India, Brazil, South Africa) is a trilateral partnership between the three countries that was established in 2003. The partnership has been working to promote cooperation in areas such as trade, investment, and technology. In terms of digital governance, IBSA has been actively involved in initiatives such as the Global Forum on Cyber Expertise (GFCE), which is a platform for international cooperation on cyber security and digital governance. IBSA has also been involved in the development of the Global Digital Governance Agenda, which is a framework for international cooperation on digital governance issues. Additionally, IBSA has been working to promote the adoption of digital technologies in the three countries, such as through the establishment of the IBSA Digital Innovation Hubs.

The Global Digital Compact (GDC) process has started picking up momentum, with a rather packed schedule of consultations and thematic deep-dives. The topics selected for these deep dives offer a glimpse into what the GDC is expected to cover (from connectivity and digital inclusion, to sustainable development and AI).

At the same time, countries’ positions are shaping up: G77 and China, for example, want the GDC to ‘focus on the pressing need to address the major structural impediments that developing countries face in engaging with and accessing new and emerging technologies’, while ‘avoiding duplication across various entities and processes related to global digital cooperation’. The EU expects the GDC to ‘serve as a roadmap for a human-centric, global digital transformation’.

The GDC saw several milestones this year. Rwanda and Sweden, as co-facilitators, announced the roadmap for the GDC process in January, which was further updated in May, and other regions began shaping up their positions.

The process saw two main documents delivered this year. In May, the UN Secretary-General issued a policy brief for the GDC, outlining eight areas in which ‘the need for multistakeholder digital cooperation is urgent’ and could help advance digital cooperation: digital connectivity and capacity-building; digital cooperation to accelerate progress on the SDGs; upholding human rights; an inclusive, open, secure and shared internet; digital trust and security; data protection and empowerment; agile governance of AI and other emerging technologies; and global digital commons.

The most notable proposal is to establish the annual Digital Cooperation Forum to be convened by the Secretary-General. It is expected to facilitate collaboration across digital multistakeholder frameworks and reduce duplication, promote cross-border learning in digital governance, and identify policy solutions for emerging digital challenges and governance gaps. The brief also suggested establishing a trust fund to sponsor a Digital Cooperation Fellowship Programme to enhance multistakeholder participation.

In September, co-facilitators Rwanda and Sweden released an Issues Paper outlining their assessment of the deep dives and consultations conducted in relation to the GDC.

The GDC is moving from multistakeholder consultation to multilateral negotiations. The policy brief proposed several mechanisms.

We also witnessed the energising effect of the GDC process on different communities, encouraging stakeholder involvement at the IGF 2023 during the main Session on GDC: A Multistakeholder Perspective and garnering support at the UNGA78.

Framed by the outcomes of the 2023 IGF, the GDC is to be agreed upon in the context of the Summit of the Future in 2024 and is expected to ‘outline shared principles for an open, free and secure digital future for all’. For the upcoming year, permanent representatives of Sweden and Zambia to the UN were appointed as co-facilitators of the GDC process.

In 2023, digital cooperation processes will accelerate the build-up for 2025 when the World Summit on the Information Society (WSIS) implementation will be revisited, including the future of the Internet Governance Forum (IGF). In 2025, UN cybersecurity discussions will evolve from the OEWG towards the UN Programme of Action (PoA).

The next few years will also bring digital cooperation around the Agenda 2030 closer as digitalisation will become critical for the realisation of the 17 sustainable development goals (SDGs). An important stop on the way to 2025 will be the adoption of the Global Digital Compact (GDC) during the UN Summit of the Future in 2024.

The year 2025 will be significant for the World Summit on the Information Society (WSIS) process. As the implementation of WSIS outcomes is revisited, so will the future of the Internet Governance Forum (IGF).

At the start of 2023, the IGF will provide input into the Global Digital Compact (GDC) process, building on the messages from IGF 2022. Japan, as the host of the next IGF (Kyoto, October 2023), is likely to give new life to the Osaka Track on Data Governance, initiated during Japan’s G20 presidency in 2019.

Also worth keeping an eye on in 2023 will be the work of the IGF Leadership Panel. Appointed in 2022, the panel is expected to help raise the visibility of the IGF and ‘provide strategic input and advice’ on the forum.

With the IGF’s current 10-year mandate coming to an end in 2025, we will most likely see discussions about the future of the forum accelerating, including its interplay with the work of the Office of the UN Secretary-General’s Envoy on Technology.

As for the Global Digital Compact process, facilitated by Rwanda and Sweden together with the UN Secretary-General’s Tech Envoy, the multistakeholder consultations will be followed by discussions at a ministerial meeting in September 2023 (dedicated to preparing the 2024 Summit of the Future).

Throughout 2022, many questions were raised about the GDC process and the compact itself:

At least some questions should begin to be answered in 2023.

Two challenges for international organisations and digital transformation

International organisations are entering a phase of profound change in their respective policy fields. One of the UN Tech Envoy’s tasks is to help the digital transition of the UN system. Two main challenges will top the agenda: mainstreaming of digital transformation and holistic digital policy making.

At a time when everything is becoming digital, mainstreaming of digital transformation comes high on the list of priorities of international organisations. E-trade is becoming just trade. Digital health is just health. Cybersecurity is core security. Digital mainstreaming will impact the modus operandi of international organisations.

Holistic digital policy making will reflect the multifaceted nature of digital issues. For example, should data be addressed as a technical, legal, economic, security, or sociocultural issue? AI is an even more multifaceted issue with blurred lines between different policy angles. Currently, more and more international organisations are covering such issues, trying to position themselves as strong actors in what could be seen as a ‘competence competition’.

AI, for example, is covered by ITU, the UN Educational, Scientific and Cultural Organization (UNESCO), the Human Rights Council, the Group of Governmental Experts on Lethal Autonomous Weapons Systems (UN GGE on LAWS), etc. While there is justification for specific policy angles covering AI and data, the main challenge will be to at least avoid governance confusion and at best trigger policy cross-fertilisation.

Digital cooperation is expected to continue to grow and evolve in 2023. We can expect to see more collaboration between businesses, governments, and individuals, as well as increased use of digital tools and platforms to facilitate communication and collaboration. We can also expect to see more use of artificial intelligence (AI) and machine learning (ML) to automate processes and improve efficiency. Additionally, the development of blockchain technology is likely to have a major impact on digital cooperation, allowing for secure and transparent transactions between parties. Finally, the development of 5G networks will enable faster data transfer speeds, allowing for more efficient digital cooperation.

Dive deeper: IGF 2022 Summary Report | GDC process

In 2023, attacks on human rights that happened offline had an effect on human rights online, especially as the number of wars and conflicts grew. An increasing number of shutdowns impeded individuals’ right to access the internet. Concerns about how AI models breach data and privacy protection gave the privacy debate new momentum.

It’s important to remember that AI also had an effect on the press in 2023. The 2023 World Press Freedom Index found that the amazing progress in AI is shaking up the media world even more.

In 2023, the protection of privacy and personal data in the digital space was at the centre of multiple digital policy developments around the world. We have already mentioned above the (relatively) new concerns about the implications of generative AI for privacy and data protection – something that more and more privacy watchdogs are looking into. Besides this, there have been several regulatory, enforcement, and self-regulatory developments worldwide, from India’s and Saudi Arabia’s initiatives to strengthen the legal protections for personal data, through Ireland fining Meta €5.5 million for data breaches, to Google banning personal loan apps from accessing sensitive data. On other fronts, the EU and Japan have concluded the first review of their Mutual Adequacy Arrangement on personal data protection, and the UN Special Rapporteur on privacy has called on governments to delete personal data collected during the COVID-19 pandemic.

International Women’s Day in early March brought important attention to gender rights. Some of the highlights included debates on the (potential) role of AI in achieving gender equality, the launch of UNESCO’s Women 4 Ethical AI platform, calls to accelerate progress towards bridging digital gender gaps and to meaningfully consider gender issues in the development of the Global Digital Compact, and the release of a new report on the digital targeting of LGBT people.

Privacy and inclusion—the two issues we have explored above—were also highlighted in the Ibero-American Charter of Principles and Rights in Digital Environments, adopted by the Organization of Ibero-American States. The charter outlines ten principles to guide meaningful digital inclusion, from privacy and security, to fairness and inclusiveness. Another multilateral initiative that saw the light of day recently is the Guiding Principles on Government Use of Surveillance Technologies, a non-binding instrument developed by the 36 member states of the Freedom Online Coalition.

Human rights are both enabled and endangered online. These two extremes will shape online human rights in 2023 with the following specific developments:

The main challenge will be strengthening the application of existing human rights rules online while charting well-balanced regulations for new areas (e.g. regulations that encourage ethical neuroscience developments while protecting human dignity and integrity).

In 2023, we can expect the USA and the other countries that are parties to the Declaration for the Future of the Internet to focus on the implementation of freedom of expression, privacy protection, and other first-generation human rights. The EU will continue putting emphasis on the protection of data and privacy. In addition, the EU will work more on linking human rights issues to standardisation and to other ways in which technology is developed.

A holistic approach to digitalisation and human rights will put the question of second-generation (economic, social, and cultural) and third-generation (environmental and intergenerational) human rights in the spotlight.

The fourth generation of human rights will also become more relevant, triggered by risks as a result of the developments in AI, bio, and nanotechnologies. In September 2022, the UN Human Rights Council adopted a resolution asking for a study ‘on the impact, opportunities and challenges of neurotechnology with regard to the promotion and protection of all human rights, including recommendations on how human rights opportunities, challenges and gaps arising from neurotechnology could be addressed by the Human Rights Council’. The Council of Europe also initiated a policy debate on neurotechnologies.

More new angles and aspects will be introduced into the work of the UN human rights bodies. For example, digital inclusion and internet access will gain additional prominence in the context of promoting and protecting the rights of marginalised groups, youth, women, and people with disabilities.

As human rights issues are increasingly brought up in standardisation discussions, there will be a push for human-rights-by-design approaches to be embedded into technical standards that form part of the design and development process of new hardware and software.

It is difficult to predict how human rights online will develop in 2023, as the internet and technology are constantly evolving. However, it is likely that there will be an increased focus on protecting human rights online, with governments and organizations around the world taking steps to ensure that individuals have access to a safe and secure online environment. This could include initiatives such as increased regulation of online content, improved data protection laws, and greater enforcement of existing laws. Additionally, there may be an increased focus on educating individuals about their rights online and providing resources to help them protect themselves from cyber threats.

Dive deeper: Human rights principles

In 2023, there is a growing consensus that something has to be done about content moderation. Apart from this shared concern, most other questions are open: by whom, how, and where should content be regulated?

The underlying question is whether social media companies can develop satisfactory self-regulation policies in-house or if they will need to be forced to regulate content, as is happening in Europe with the Digital Services Act.

The US Congress supports amending Section 230 of the US Communications Decency Act (1996), which is the founding document of the social media industry and shields it from responsibility for hosted content.

The year 2023 was a critical one for online content moderation. Major tech companies, including Alphabet, Meta, and X, faced significant challenges due to layoffs, content moderation policy reversals, and new legislation coming into force.

Experts warned that the reversal of content policies at Alphabet, Meta, and X threatened democracy. Media watchdogs highlighted that the layoffs at these top social media firms created a ‘toxic environment’ as the 2024 elections await next year. The layoffs, numbering more than 40,000, were seen as a threat to the health and safety of these platforms. X (formerly Twitter) got onto the EU Commission’s radar for having significantly fewer content moderators than its rivals.

The European Union (EU) led the way in drafting internet and social media laws, including regulations around content moderation. The Digital Services Act (DSA), approved by the Council of the EU in July 2022, enabled Ireland to hold the online platforms accountable for their content. These regulations included the immediate takedown of illegal content, mandatory risk assessments of algorithms, and increased transparency in content moderation practices.

In August, the DSA began implementing strict online content measures on 19 very large online platforms and search engines. These measures ranged from the obligation to label all adverts and inform users who’s behind the ads, to allowing users to turn off personalised content recommendations. The DSA’s impact extended beyond the boundaries of the EU, affecting any company servicing European users, regardless of where it is based.

Major social media platforms were additionally warned about non-compliance in digital diplomacy when Thierry Breton flew to Silicon Valley in August to remind Big Tech CEOs about Brussels’ expectations. Previously, X, for instance, pulled out of the code to tackle disinformation, but Breton insisted on its compliance with the DSA when operating in the EU.

Besides Twitter turning into X, TikTok developments marked this year. In the US, several states introduced legislation to ban the app. Critics argued that banning TikTok may violate First Amendment rights and would set a dangerous precedent of curtailing the right to free expression online.

TikTok, seen as an extended arm of the Chinese government, faced broader backlash as Belgium, Norway, the Netherlands, the UK, France, New Zealand, Austria, and Australia issued guidelines against installing and using TikTok on government devices. In response, China ‘made solemn démarches’ to Australia over the Australian ban on TikTok on government devices.

Content moderation also hit a rather massive bump in late 2023 with the onset of global conflicts when the platforms drew a lot of criticism for failing to combat harmful content. As people grappled with the violence unfolding in Israel and Gaza, social media platforms became inundated with graphic images and videos of the conflict. This made it hard for anyone looking for information about the conflict to parse falsehood from truth.

In response to these challenges, tech companies took various measures. Meta established a special operations centre staffed with experts, including fluent Hebrew and Arabic speakers. TikTok established a command centre for its safety team, added moderators proficient in Arabic and Hebrew, and enhanced automated detection systems. X removed hundreds of Hamas-linked accounts and removed or flagged thousands of pieces of content.

Content moderation saw its day, or should we say days, in court as well. Meta, the parent company of Facebook and Instagram, confronted a legal battle initiated by over 30 US states. The lawsuit claimed that Meta intentionally and knowingly used addictive features while concealing the potential risks of social media use. And in the UK, the Online Safety Act came into effect, imposing new responsibilities on social media companies.

In an interesting development in global digital policy, UNESCO has been working on a set of Guidelines for regulating digital platforms – an international and soft law approach to content policy.

Finally, the ease of content generation with AI has marked a significant risk to the digital information space and especially the upcoming elections, contributing to the spread of fake news, deepfakes, and overall challenging the democratic electoral process. We also saw Meta outline a new policy on AI political advertising.

In 2023, countries and companies will intensify their search for better ways to govern content. This will impact the economy, human rights, and the social fabric of societies worldwide.

On the multilateral level, UNESCO will focus on content as a public good, while the UN Human Rights Council will address content through freedom of access to information.

In the corporate sector, the success or failure of Elon Musk’s Twitter experiment will have far-reaching implications for the future of content governance.

Most initiatives on content governance will try to find a balance between the legal status of social media platforms and their social roles. Legally speaking, these are private companies with very little legal responsibility for the content they publish. Societally speaking, these companies are public information utilities that impact people’s perception of society and politics. Twitter’s founder Jack Dorsey described Twitter as ‘the public conversation layer of the internet’.

Currently in the USA, tech platforms are not responsible for the content they host (as per Section 230 of the US Communications Decency Act). Although there are calls from both parties in the US Congress to revisit this arrangement, content governance in the USA is still in the hands of tech companies. The most important development in the coming year will be how Musk’s policy experiment with Twitter turns out. If he is successful, he may show that a self-regulation model for content governance is possible. If he fails, it will be a sign that the US Congress has to step in with public regulation, most likely by revising Section 230.

In the EU, content governance has shifted towards public regulation. The Digital Services Act (DSA) introduced new, stricter rules that social media companies will have to follow. Their implementation will start in 2023.

Similar to GDPR and data regulation, many countries are likely to take inspiration from the EU’s DSA approach to content governance.

On a multilateral level, UNESCO will host the conference Internet for Trust: Regulating Digital Platforms for Information as a Public Good in February 2023 as the next step in developing content governance around its Guidance for regulating digital platforms: A multistakeholder approach.

It is difficult to predict whether Elon Musk will be successful in developing content governance at Twitter. However, given his track record of success in other areas, it is likely that he will bring a fresh perspective and innovative ideas to the table. Ultimately, the success of any content governance system will depend on the implementation and enforcement of the policies.

Dive deeper: Content policy

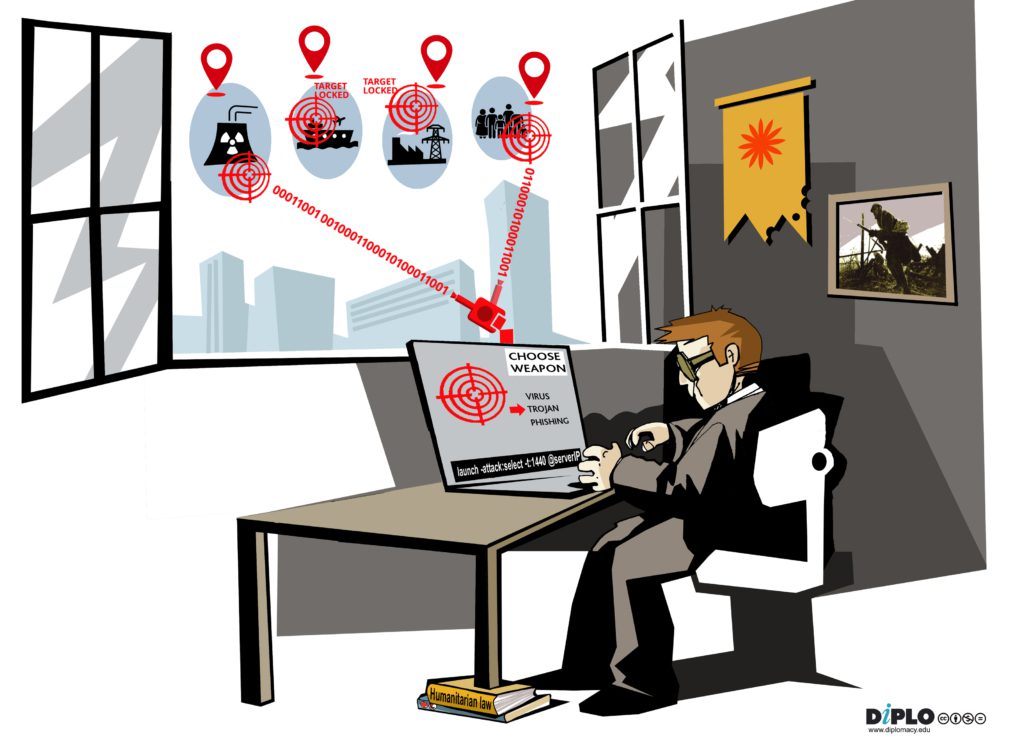

In 2023, cybersecurity has emerged in a wide range of contexts, from an increasing number of wars and conflicts (Ukraine-Russia, the invasion of Gaza, etc.) to cyberespionage and cybercriminal activities.

While the absence of a full-fledged cyberwar is welcome news, cyberoperations have been integrated into military operations as well as hybrid conflicts, with an increasing number of governments being targeted online. AI’s rapid development has resulted in new types of cybersecurity threats.

In 2023, we’ve seen a rise in cyberattacks across the board. Cyber operations aimed at nations were plentiful this year. Some examples include cyberattacks on the government websites of Colombia, Italy, Sweden, Senegal, and Switzerland. A ransomware attack on Sri Lanka’s government cloud system resulted in data loss for 5,000 affected accounts.

Critical infrastructure was also targeted: for instance, Martinique faced weeks of disruptions due to a cyberattack that affected its internet connectivity and critical infrastructure. The Five Eyes cyber agencies attributed cyberattacks on US critical infrastructure to the Chinese state-sponsored hacking group Volt Typhoon, an attribution China has denied.

Cyber espionage groups were also busy: the USA and South Korea issued a joint advisory warning that North Korea uses social engineering tactics in cyberattacks. A trove of leaked documents, dubbed the Vulkan files, have revealed Russia’s cyberwarfare tactics against adversaries such as Ukraine, the USA, the UK, and New Zealand. The heads of security agencies from the USA, the UK, Australia, Canada, and New Zealand, collectively known as the Five Eyes, have publicly cautioned about China’s widespread espionage campaign to steal commercial secrets.

On the cybercrime front, many organisations were struck by cyberattacks this year, too many to enumerate here. Ransomware has reached record levels due to the exploitation of vulnerabilities in the MOVEit software. This hack and the attack on 3CX have again highlighted that supply chain security is a significant concern for industries and the public sector.

We’ve also seen claims that threat actors use AI in cyberwarfare and cybercrime to spread disinformation online, craft sophisticated fake images and videos for phishing campaigns, and create malware.

Cyber threats are transnational by nature, and so nations have banded together to tackle them. There have been some wins for the ‘good guys’ in fighting cyber threats. For example, joint operations disrupted the Russia-linked Hive ransomware network and the Qakbot malware botnet. International law enforcement agencies also seized the dark web’s Genesis Market, which was popular for selling digital products to malicious actors. Multilateral efforts are also underway, such as the Counter Ransomware Initiative (CRI), the UN OEWG (which deals with the responsible behaviour of states in cyberspace), and the Ad Hoc Committee on Cybercrime (which is mandated to draft a convention on cybercrime).

In 2023, the OEWG reached the mid-point of its mandate. The group held its fifth and sixth substantive sessions during the year, and it is making headway on capacity development and confidence-building measures (CBMs). Cyber capacity building is seen as crucial in enabling countries to identify and address cyber threats while adhering to international law and norms for responsible behaviour in cyberspace.

Countries have therefore been able to determine some of the next steps, including a) a mapping exercise planned for March 2024 that aims to survey global cybersecurity capacity building initiatives comprehensively and b) a roundtable scheduled for May 2024 showcasing ongoing initiatives, creating partnerships, and facilitating a dynamic exchange of needs and solutions. There’s widespread support for establishing the global Points of Contact (PoC) directory as a valuable CBM, so much so that countries will be invited to nominate PoCs at the beginning of 2024. Dedicated meetings on the PoC are also planned, including an informal online information session on the directory in February and an intersessional meeting to discuss stakeholders’ role in the PoC directory in May.

With the concluding session of the Ad Hoc Committee on Cybercrime (AHC) approaching in January 2024, an international convention on cybercrime seems close, yet contentious issues remain. The main question carried over from 2022 was: would the AHC achieve a draft text of the convention in 2023? It did. After three sessions in January, April, and August of 2023, there is a draft text of the convention, with the latest revision issued in November 2023.

However, as international human rights organisations, including Article 19, have warned, if the scope of offences expands to cover any crime, the convention could become a repressive tool in the hands of authoritarian governments. This is mainly because the offences considered under the preamble of the draft text of the convention are not limited to cyber-dependent crimes, which rely on cyber elements for their execution.

Another issue relates to the cross-border cooperation between states stipulated under Article 40 of the draft text of the convention, which allows the exchange of information about individuals who have committed a criminal offence under the convention. The lack of data protection measures in this provision raises the possibility of introducing additional surveillance powers, potentially conflicting with international legal obligations, especially for states party to the Budapest Convention (Convention on Cybercrime), currently the only internationally binding legal instrument on cybercrime. Conflicts with existing international instruments would therefore be inevitable if laws are not harmonised.

Inasmuch as we’d like the internet to be a more secure space, there are at least two factors hindering everyone’s efforts:

The good news is that countries worldwide, especially in Africa and Asia, are increasing their cybersecurity protection. It’s also promising to see UN negotiations on cybersecurity continue. The risks are major, but solutions are emerging.

The Ukraine war, which dominated much of 2022, escalated beyond its borders. The war has been fought both on the ground and online, mostly through cyberattacks. The cyber risks we warned about in the early days of the conflict—from misattribution of cyberattacks to cyber-offences against third countries’ critical infrastructure or companies—remain a viable threat.

Against this geopolitical backdrop, there are two main reasons why it’s an uphill struggle to make the internet a more secure space.

The first is that we are increasing our dependence on technology more rapidly than ever. For example, we’ve moved everything and anything to the cloud, which once looked safe, but now is becoming less so (as the attacks against and breaches into the cloud services of Twitter, Uber, Revolut, and LastPass confirm). Attackers have become more resourceful and determined to breach anything.

The second is that technology is getting even more complex:

In our rush to innovate, many of these emerging technologies still lack security standards and good practices, and are easy targets.

There is room for optimism, however. Many governments and institutions have strengthened their cyber-resilience in response to the threat of more severe and impactful attacks, such as those seen during the Ukraine war. Developing countries are becoming more interested in the cybersecurity agenda, and their increased participation in global processes could put pressure on the leading cyber powers to act more responsibly.

In the UN Ad Hoc Committee on Cybercrime, whose main goal is to make the first draft of an international convention on cybercrime, countries have made some progress on provisions related to the definitions of cybercrime, procedural measures, law enforcement, and the applicability of international human rights provisions. And while there are many divergent positions, the overall momentum seems promising, and we may hear more good news from Vienna and New York in 2023.

So far, there have been three sessions, and a consolidated negotiating document has been prepared by the committee Chair with the support of the Secretariat. The document is a compilation of states’ proposals regarding the general provisions, criminalisation, procedural measures, and law enforcement of the draft convention.

A second consolidated negotiating document will be prepared in 2023, based on the outcomes of the committee’s third session regarding international cooperation, technical assistance, preventative measures, mechanisms of implementation, and final provisions of the convention. Basically, these combined documents could be used as a starting point for member states to write the convention, which is expected to be presented to the UN General Assembly (UNGA) at its 78th session in September 2023.

One of the main debates expected to take place before the finalisation of the draft convention concerns the criminalisation of offences. In brief, one group of states is proposing that the convention be limited to criminalising cyber-enabled and cyber-dependent crimes, while a second group wants to expand the convention’s competence to other offences, including cyberterrorism. If this debate turns into an impasse, states could either agree to go with a limited scope and leave room for further negotiation down the line, or extend the scope through an optional protocol.

Another issue is the protection of human rights and fundamental freedoms. Human rights organisations, such as Human Rights Watch, raised concerns over the protection of human rights when countering cybercrime, and are calling for more efforts to ensure their effective protection.

The UN Open-Ended Working Group (OEWG) on cybersecurity is a horse of a different colour, but it has also made good progress on its mandate. The OEWG, which is halfway through its five-year mandate, has a busy calendar in 2023, with two substantive sessions, more informal consultations on a Points of Contact (PoC) directory, and another annual progress report.

Another process in the spotlight will be the Programme of Action (PoA), a UN-driven action-oriented mechanism envisioned to provide concrete support for the implementation of agreed cyber norms. The UN General Assembly First Committee declaration of 5 November 2022 on the PoA on cybersecurity made the establishment of the PoA a reality. However, its scope, structure, and content are all up for discussion, which will intensify in 2023 ahead of 2025, when the PoA is supposed to start following the conclusion of the work of the OEWG (2021-2025).

In 2023, we can expect a continued focus on developing artificial intelligence (AI) and machine learning (ML) technologies to help detect and prevent cyberattacks. Cloud security will receive more emphasis as more organisations move their data and applications to the cloud. User authentication and identity management, including more secure authentication protocols, will also draw more attention. Finally, data privacy and encryption will gain prominence, along with new technologies to help protect sensitive data from unauthorised access.

The Geneva Dialogue on Responsible Behaviour (Phase III), a project of the Swiss authorities run by Diplo, will leverage the unique setting of international Geneva to continue the global conversation on roles and responsibilities of stakeholders in cyberspace. The project deep-dives into implementing various existing international norms, confidence-building measures, and principles to ensure cyber-stability – such as reducing vulnerabilities in the digital environment and the supply chain. You can read more here.

Dive deeper: Find out about the latest on the current situation in the UN cybersecurity negotiations (OEWG and PoA)

Even though the digital economy grew less quickly in 2023, it is still on the upswing and on track to recover after 2022. In line with the growth of the overall US economy, the digital sector worldwide also grew, and the positive trend is continuing.

AI was the main driver of investment in 2023. Not only did AI applications make up a significant portion of investments, but developments around this technology also pushed for regulatory overhaul in many areas of the digital economy, such as data flows, e-commerce, and consumer protection.

On digital trade governance, the major surprise was the withdrawal of the United States from WTO’s Joint Statement Initiative on E-Commerce.

ITU’s 2023 report ‘Facts and Figures’ recognised that connectivity continued to become more affordable in all regions of the world and for all income groups. However, the report stated that ‘lack of affordability continues to be a key barrier to Internet access particularly in low-income economies. A wide gap persists between high-income economies and the rest of the world’.

This was one of the main topics of the 2023 UNCTAD’s eWeek, as some of the biggest world economies such as India, China, and the European Union pledged additional resources to improve the benchmark of their digital economies.

Bilateral agreements around the development of the digital economy have become more common. Japan and the EU strengthened ties in the third High-Level Economic Dialogue, while the US and UK announced the Atlantic Declaration for a Twenty-First Century U.S.-UK Economic Partnership, with a focus on the digital economy.

Worldwide spending on digital transformation technologies and services continued to grow at the expected rate.

The digital economy will face the full brunt of the economic crisis, with a possible recession in 2023. There will be less money for the next big thing – whatever it may be. As investors opt for safe economic options, digital investments are unlikely to be their table of choice.

Bitcoin, often labelled as the new gold, has lost its allure due to the recent failures of cryptocurrencies. Web 3.0 dynamism has slowed down. Quantum computing is too far away on the horizon to make a major economic impact.

In digital economy governance, the main focus will be on digital trade, data flows, the implementation of the new global tax deal, and the regulation of cryptocurrencies.

As the economic crisis unfolds worldwide, the digital economy will face the full brunt. Things aren’t looking up for the ‘next big thing’ – there’s not much money around.

With so many daunting prospects for the economy, the governance of the digital economy will focus on three main areas: free trade, data flows, and the implementation of the new global tax deal.

The beginning of 2023 brought some of the hardest challenges for the cryptocurrency industry. After the December arrest of Sam Bankman-Fried, we predicted a harder regulatory push toward the crypto industry. In February, the US cryptocurrency company Paxos was ordered by the New York State Department of Financial Services (NYDFS) to stop the issuance of the BUSD stablecoin. The BUSD stablecoin was a significant money line for online exchanges as it served as a bridge from the crypto industry to the traditional banking and investment arena.

This stablecoin was closely connected to the world’s biggest crypto exchange, Binance, which was again in the focus of US regulators in April when the US Commodity Futures Trading Commission (CFTC) filed a suit against it. The Binance exchange and the company’s CEO, Changpeng Zhao, are accused of trading violations. Stablecoin regulation was on the agenda of the G20 Finance Ministers meeting on 24-25 February in India.

The ministers also discussed what we thought would be the focus for 2023: the design and issuing of central bank digital currencies (CBDCs). The EU is finalising its plans, while the BIS is working with other central banks for the final push.

After the collapse of Silicon Valley Bank, which was a bank of choice for a large part of the US crypto industry, a colder climate was also announced by the Biden administration. The new 2024 Revenue Proposal in the US will consider progressively taxing electricity that is used for cryptocurrency mining. We also predict a sharper focus on the requirements and measures for an environmentally responsible industry.

The European Parliament has approved the Markets in Crypto-Assets (MiCA) regulation, a comprehensive piece of legislation for the crypto and digital assets industry. Central bank digital currencies (CBDCs) continued to be on central banks’ agendas. The BIS report shows that the financial sector is ready for this innovative technology, while the European Central Bank has announced a two-year preparation phase for the launch of the digital euro.

Cryptocurrency regulation was also in the focus of the G20 group throughout 2023. The G20 released a joint paper with the IMF, outlining policy and regulatory recommendations on cryptocurrencies. According to Chainalysis, a crypto research firm, the global adoption of cryptocurrencies is predominantly being led by lower-middle-income countries.

The year 2022 delivered two opposite sides of the cryptocurrency hype cycle. After all-time high values in late 2021, the year 2022 started with a declining trend which proved to be constant throughout the year.

Currently, bitcoin sits at US$16,500, which is an issue for bitcoin miners, as this price is on the edge of the costs of mining. This led one of the largest publicly traded cryptocurrency firms, Core Scientific (USA), to file for bankruptcy in December 2022.